Abstract: The convolutional neural network (CNN) is a machine learning model that has achieved excellent results in recognizing human facial expressions. Many optimizers are now available for training a CNN model. This study therefore implements and compares 14 gradient-based CNN optimizers for classifying facial expressions in two datasets, namely the Advanced Computing Class 2022 (ACC22) and Extended Cohn-Kanade (CK+) datasets. The 14 optimizers are classical gradient descent, traditional momentum, Nesterov momentum, AdaGrad, AdaDelta, RMSProp, Adam, RAdam, AdaMax, AMSGrad, Nadam, AdamW, OAdam, and AdaBelief. The study also reviews the mathematical formulas of each optimizer. Using the default parameters of each optimizer, the CNN model is trained on the training data to minimize the cross-entropy for up to 100 epochs. The accuracy of the trained CNN model is then measured on both the training and testing data. The results show that the Adam, Nadam, and AdamW optimizers perform best in training and testing, both in minimizing cross-entropy and in the accuracy of the trained model. All three produce a cross-entropy of around 0.1 at the 100th epoch, with an accuracy of more than 90% on both training and testing data. Furthermore, the Adam optimizer gives the best accuracy on the testing data for the ACC22 and CK+ datasets, at 100% and 98.64%, respectively. The Adam optimizer is therefore the most appropriate of the 14 for training the CNN model in this facial expression recognition task.
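To make the kind of update rule compared in the study concrete, the following is a minimal sketch of a single Adam step in plain Python. It is not the authors' implementation. The function names `adam_step` and `minimize_quadratic`, the toy quadratic objective, and the learning rate of 0.05 used in the toy run are all assumptions made for illustration. The default values shown (learning rate 0.001, beta1 0.9, beta2 0.999, epsilon 1e-8) are the commonly cited Adam defaults and may differ from the settings used in the paper.

```python
import math


def adam_step(theta, grad, m, v, t, lr=0.001, beta1=0.9, beta2=0.999, eps=1e-8):
    """Apply one Adam update to a list of parameters.

    theta, grad, m, v are lists of floats of equal length; t is the
    1-based step counter. Returns the updated (theta, m, v).
    """
    new_theta, new_m, new_v = [], [], []
    for p, g, m_i, v_i in zip(theta, grad, m, v):
        # Exponential moving averages of the gradient and its square.
        m_i = beta1 * m_i + (1 - beta1) * g
        v_i = beta2 * v_i + (1 - beta2) * g * g
        # Bias correction for the zero-initialised averages.
        m_hat = m_i / (1 - beta1 ** t)
        v_hat = v_i / (1 - beta2 ** t)
        # Parameter update scaled by the root of the second moment.
        new_theta.append(p - lr * m_hat / (math.sqrt(v_hat) + eps))
        new_m.append(m_i)
        new_v.append(v_i)
    return new_theta, new_m, new_v


def minimize_quadratic(steps=2000, lr=0.05):
    """Toy run (illustrative only): minimise f(x, y) = (x - 3)^2 + (y + 1)^2."""
    theta = [0.0, 0.0]
    m = [0.0, 0.0]
    v = [0.0, 0.0]
    for t in range(1, steps + 1):
        grad = [2 * (theta[0] - 3), 2 * (theta[1] + 1)]
        theta, m, v = adam_step(theta, grad, m, v, t, lr=lr)
    return theta


if __name__ == "__main__":
    print(minimize_quadratic())
```

In a CNN setting the gradient would come from backpropagating the cross-entropy loss rather than from a closed-form quadratic, but the per-parameter update has the same shape.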

Keywords: AlexNet architecture, confusion matrix, convolutional neural network, deep learning, facial expression recognition, gradient-based optimizer

link: 10.30598/barekengvol16iss3pp927-938

Published in BAREKENG: Jurnal Ilmu Matematika dan Terapan, Vol. 16(3): 927-938.

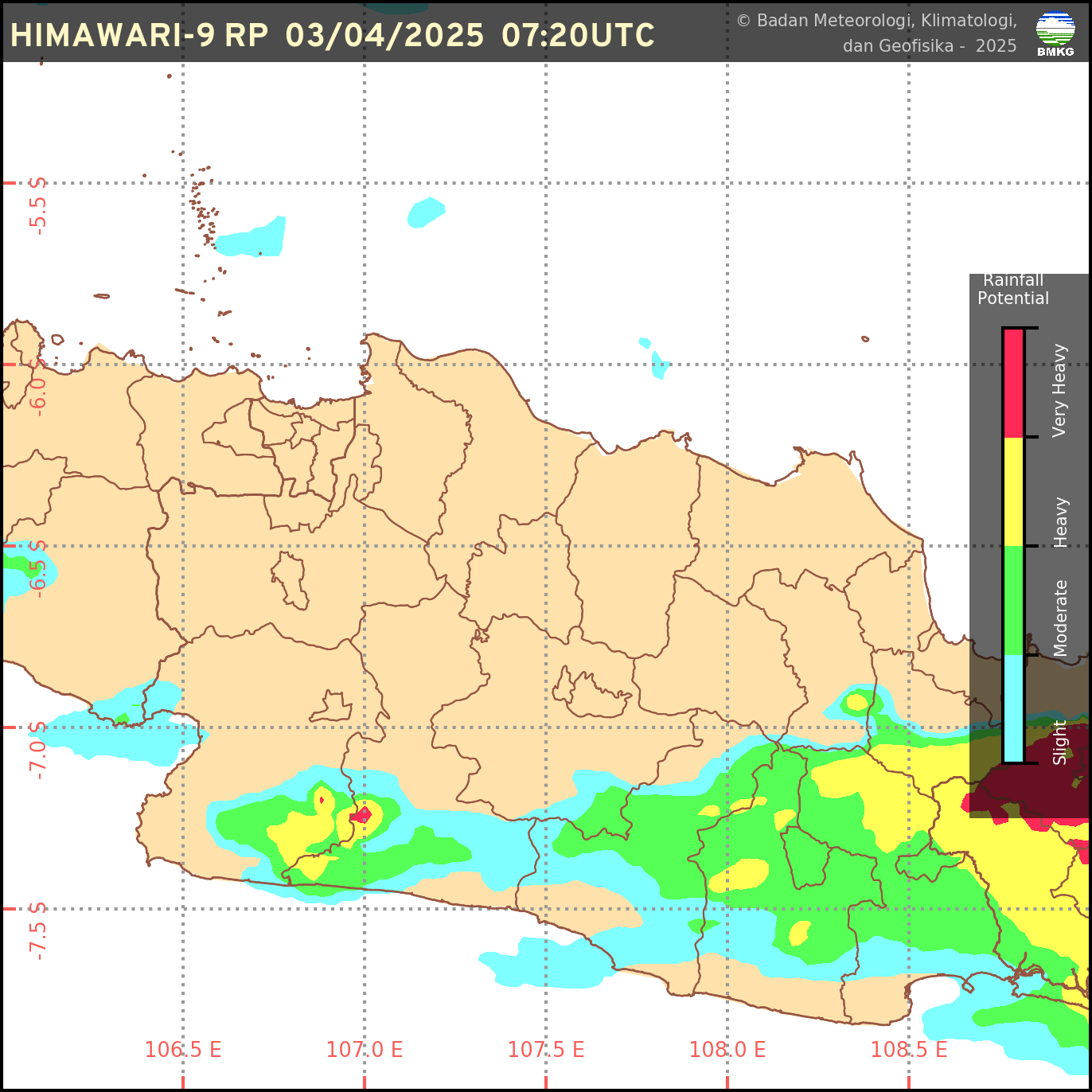

Sumber: BMKG

Sumber: BMKG
