Authors: MK Najib, A Irawan, FN Salsabilla, S Nurdiati

Abstract: Artificial intelligence has become widely adopted because of its broad range of applications. Many models and methods have been developed for different purposes, and each requires a reliable optimizer during training. Many optimizers have been proposed, and they have become increasingly reliable. In this article, we classify the synopsis texts of several movies into nine genre classes using Natural Language Processing (NLP), building models with an Embedding layer and Long Short-Term Memory (LSTM). The models are trained with several optimizers: stochastic gradient descent (SGD), AdaGrad, AdaDelta, RMSProp, Adam, AdaMax, Nadam, and AdamW. They are evaluated using accuracy, recall, precision, and F1-score. The results show that a model structure with embedding, LSTM, two dense layers, and a dropout rate of 0.5 yields satisfactory accuracy. Optimizers based on adaptive moments give better results than classical methods such as stochastic gradient descent. AdamW outperforms the other optimizers, with a validation accuracy of 89.48%, followed closely by Adam, RMSProp, and Nadam. Measured by recall, precision, and F1-score, the drama and thriller genres score highest, while the horror, adventure, and romance genres score low. Applying Random Over Sampling (ROS) to balance the genre dataset improved the model’s testing accuracy to 98.1% and enhanced performance across all genres, including underrepresented ones. Additional testing showed that the model generalizes well to unseen data, confirming its robustness and adaptability.
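The abstract does not spell out how Random Over Sampling works, so the following is only a minimal sketch of the general technique, not the authors' implementation. It assumes the common definition: samples from the smaller classes are duplicated at random until every class matches the size of the largest one. The function name `random_over_sample`, the seed, and the toy data are hypothetical and do not come from the article.

```python
import random
from collections import Counter, defaultdict


def random_over_sample(texts, labels, seed=0):
    """Duplicate randomly chosen minority-class samples until every
    class has as many samples as the largest class."""
    rng = random.Random(seed)

    # Group the samples by their class label.
    by_class = defaultdict(list)
    for text, label in zip(texts, labels):
        by_class[label].append(text)

    # Every class is grown to the size of the largest class.
    target = max(len(items) for items in by_class.values())

    out_texts, out_labels = [], []
    for label, items in by_class.items():
        # Draw the missing samples with replacement from the same class.
        extra = [rng.choice(items) for _ in range(target - len(items))]
        for text in items + extra:
            out_texts.append(text)
            out_labels.append(label)
    return out_texts, out_labels


# Toy data (hypothetical, not taken from the article's dataset).
texts = ["a", "b", "c", "d", "e", "f"]
labels = ["drama", "drama", "drama", "thriller", "thriller", "horror"]

bal_texts, bal_labels = random_over_sample(texts, labels)
print(Counter(bal_labels))  # each genre now has 3 samples
```

All original samples are kept; only duplicates are added, so the balanced set grows from 6 to 9 items in this toy case.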

Keywords: embedding; long short term memory; optimizers; sequencing; text analysis; tokenization.

Published in Indonesian Journal of Mathematics and Applications, vol. 3(1): 1–18.

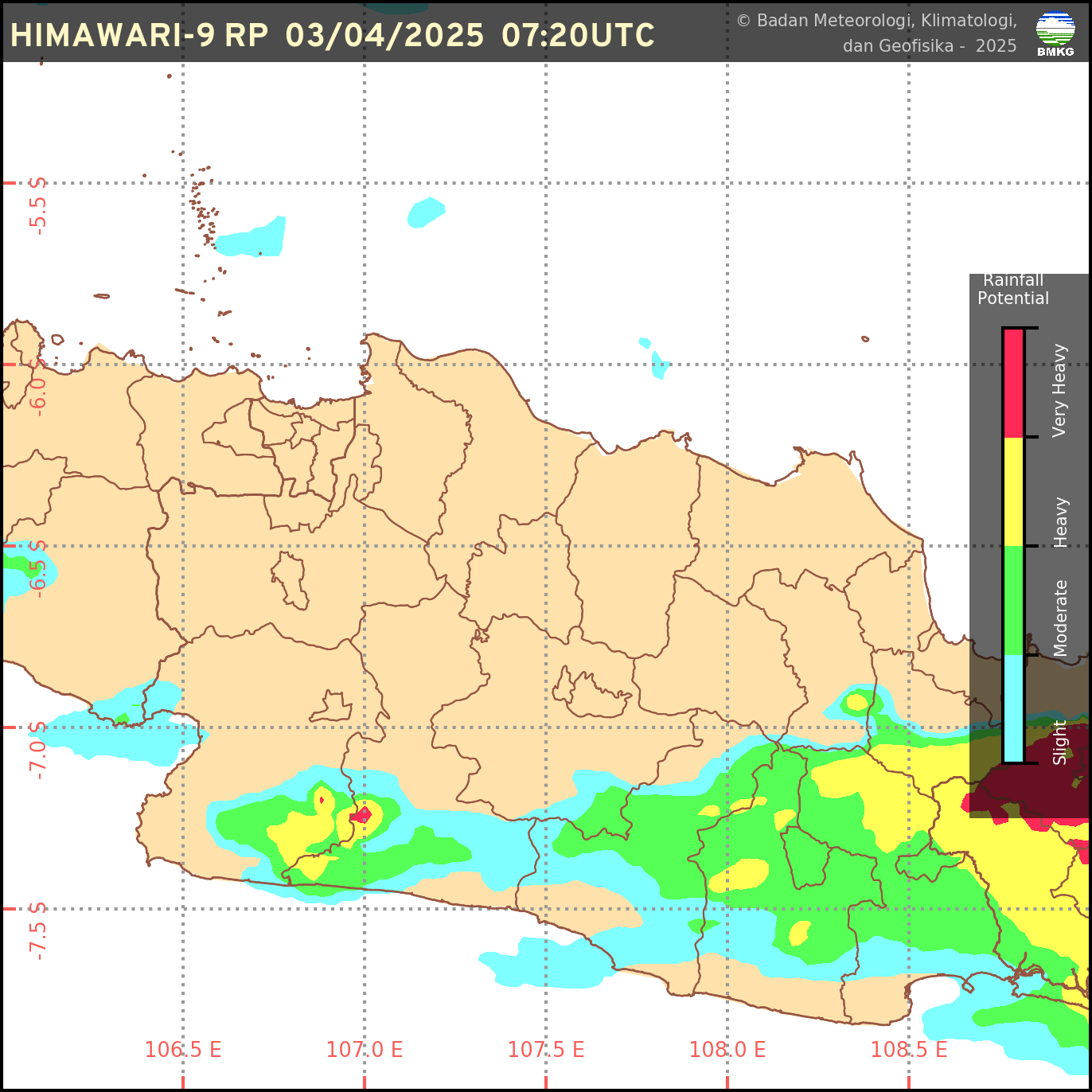

Source: BMKG
